Application Experience (AppX) for DNA Center was my major focus as a UI/UX designer during my first one and a half years with Cisco's Enterprise Networking division. This large-scale project consists of provisioning, analytics, and several diagnostic tools for application experience monitoring and troubleshooting for enterprise customers.

Process

Using a variation of the popular Design Thinking framework, our UX design process divides into three phases:

- Inspiration: The main themes are "Understand" and "Empathize": understand the domain, product, and market, and empathize with the end users, usually through generative user studies and competitive analysis. Building empathy and compassion for the end users is the very first step toward the more ambitious end goal of "Making it simple and approachable";

- Ideation/Validation: The themes are "Imagine", "Tinker", and "Iterate". Many design artifacts are produced through collaboration among the cross-functional teams and validated with customers: Miro dashboards (typically user journey maps, object graphs, and information flow maps), Balsamiq wireframes, Figma sketches, InVision prototypes, and occasionally web app prototypes. During this stage, the project typically moves through several sub-phases, from PM definition to UX definition, concept commit ready, and then engineering commit ready, before it's complete. For smaller projects, we run generative and evaluative user studies on prototypes with external and internal customers; for large features, we work with the UX research team to understand the big picture (the context and use cases) or look across features to discover common patterns.

- Implementation: The theme is "Make it Work, Make it Right/Simple, and Make it Fast". Solution design requirements and detailed designs are captured in Rally stories and Confluence wiki pages. Feedback is provided to the Cohesion design system; as a result, new design patterns for the platform may be authored for a future release. Project status is updated in the control systems. After Design QA is run, key stakeholders approve the final product features per the acceptance criteria, and the code is merged into the product for the targeted release.

Qualitative User Research

Qualitative user research has been done either in an ad-hoc, “guerrilla” fashion or at a larger scale, depending on the size of the project and the resources available at the time.

- Larger-scale user research has been carried out with our internal UX research team or a third-party agency. At the planning stage, the researchers and the product team (PM, UX, and engineers) sit down to agree on the goals, the screening requirements (including the sample size), and the methodologies. The research team then provides the research protocols (scripts). Most of the research starts with open-ended contextual inquiries, followed by moderated prototype click-through testing with either explicit or implicit user tasks, and ends with questions about user preferences and sentiments. This type of research typically takes a couple of months and aims to provide insights into the problems to solve for an agile epic.

- Ad-hoc user research is typically done with our large-account users, with our product managers demoing the mockups designed for the next sprint. This is to make sure we are solving the problems in the right way.

- We also periodically gather feedback from our internal users, Cisco IT, and our technical marketing engineers. I really enjoy the freestyle, face-to-face interaction with my coworkers (i.e., our internal users).

Challenges and opportunities for UX

- For an ambitious project such as Application Experience, we quickly saw how important it was to address the following problems:

- A shared vision of the North Star among the cross-functional teams (PM, UX, and engineering)

- Once the team sees the North Star, how can they hit the ground running?

- Build a holistic UX for the end users and tell a coherent story to the stakeholders. Historically, many different features have been built by different teams at different times, and the landscape keeps changing

- We don't have a formal framework to discover requirements and evaluate designs and products. We also don't have any formal guidelines for dealing with "master specs": does the UX mockup for a specific Rally story need to repeat all the existing specs in the flow? Nor do we have guidelines as to whether a UX mockup exists to specify the detailed content on each screen, such as all the KPIs/dashboards on a screen, copy for tooltips, and copy for individual columns in a table;

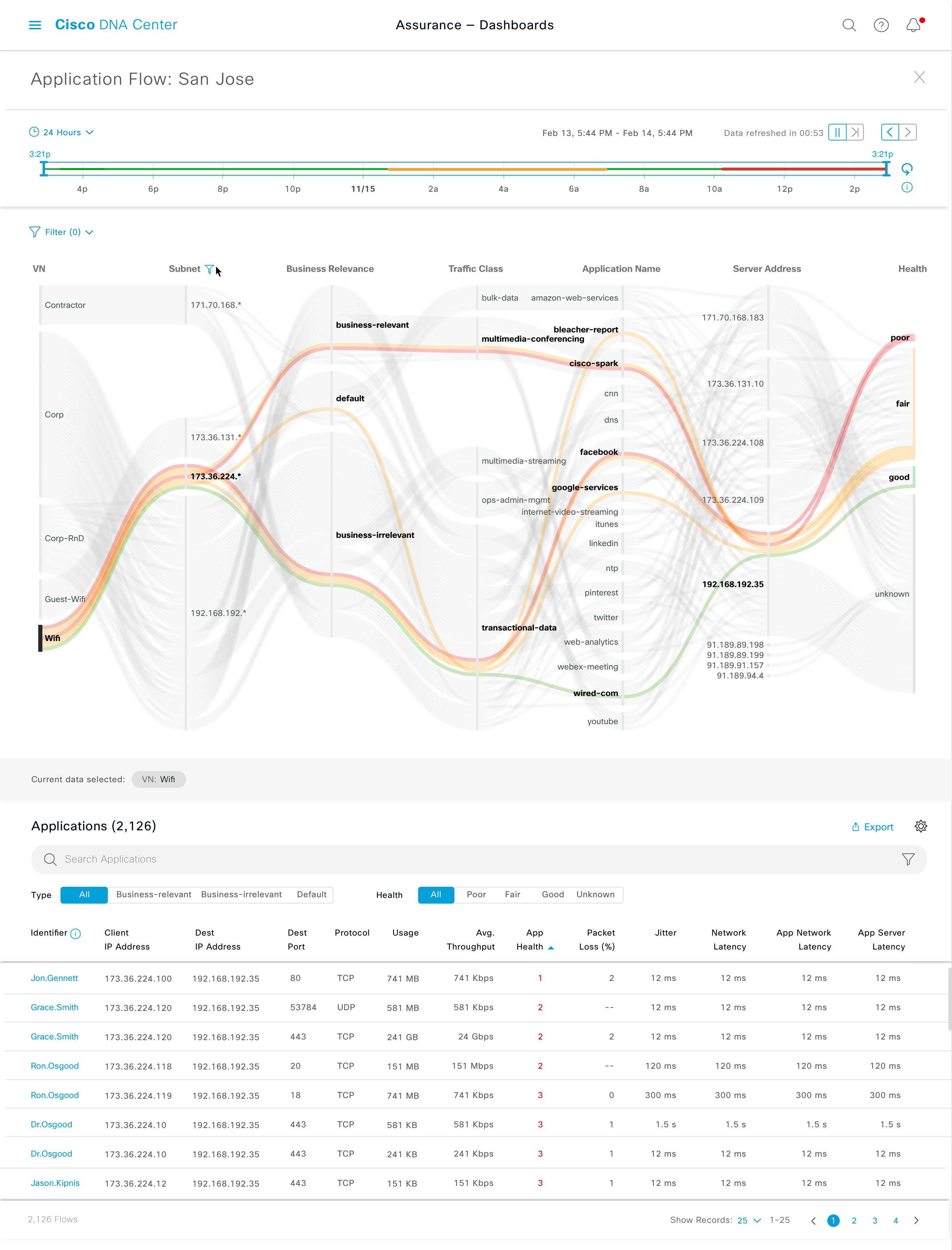

- We use exploratory data visualization [Example 1: Site health] [Example 2: App flow] [Example 3: App health distribution] in the early stages of development to back storytelling and requirement discovery, and later to help narrow down design scopes and options (e.g., design optimization and validation: "Will my chart design be able to fit/convey the shape of my data?"). This has helped designers provide an authentic UX for the product.

Brownfield Design

Many of the features in AppX are part of a long-term vision, with the earlier development stretching two to four years back. The following are some of the challenges and practices I observed when designing for brownfield:

- Incremental improvement and continuous integration. This is mostly reflected in the contrast between the ideal of bringing the team along on our UX journey with a phased approach, eventually reaching our "North Star", and the harsh reality of stopping short at the "1.0" design in the product.

- "Expert system" dilemma. This is a classic UI design problem: do we design a UI with many knobs and dials that reflect and control the intricacies and nuances of the inner workings of our tools, or an "intent-based" system (which treats the end user as the user of our tools and conveys the context of the problem from the end user's perspective), or something in between? The other interpretation of an "expert" system is one that solves the problem for the user, rather than together with the user or as a tool used by the user. To build a good UX that solves problems for the users, arguably the most important and hardest step is to translate all the insights and inner workings of the system into the user's intent.

- "Modularization" dilemma. The "vertical" silos become obvious when modules turn out to depend on one another, which we somehow failed to see when we designed the system; or worse, it was by design from the beginning and continues to be that way as the system evolves.

- "Optimize locally" vs. "Optimize globally." Every feature team is trying to find the optimal solution for the problem it is assigned to solve, and can unintentionally override other parts of the system, or even undermine the performance of the system as a whole.

- "Consistency". The following is my favorite quote by John Tukey, who introduced the box plot and co-developed the FFT algorithm, on the principle of "feeling free to be inconsistent":

"We have tried to use consistent techniques whenever this seemed reasonable, and have not worried where it didn't. Apparent consistency speeds learning and remembering, but ought not to be allowed to outweigh noticeable differences in performance."

In the spirit of "form follows function", this makes sense. It's interesting to realize that in practice we alternate between "being consistent" and "being efficient" when evaluating a design. More often than not, we start by looking up seemingly similar screens in earlier releases, use them as reference designs, and try to generalize them to the new problem at hand. This approach carries the risk of premature generalization, but it is effective for helping the design committee reach a consensus quickly.

A Basic Checklist for UI Design

- What questions are we answering in our UI? (What)

- How does the user benefit from using it? (Why)

- What questions are we asking? What do we expect the users to do? How should the UI interact with the user? (How)

In the case of Application Experience, the users want to see how their business-critical applications perform. That means they want to be able to tweak the health score per application. It also means they want to compare the application of interest with the rest of the applications in the same traffic class and with similar applications in comparable networks, and to see all of this data as changes over time (both historical and future). Among the questions we are trying to answer are:

- "How does my app perform compared to similar apps in comparable networks?"

- "How does my app perform compared to historical conditions of similar apps in the same network?"

- "Will I be notified when my business-critical applications underperform?"
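
To make the first question a bit more concrete, here is a minimal sketch of how an app could be ranked against its peers in the same traffic class. Everything in it is an assumption made for illustration: the `AppHealth` record, the 0-10 health score, the traffic class labels, and the app names are hypothetical and do not reflect the actual DNA Center data model or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppHealth:
    """Hypothetical record: one application's health at a point in time."""
    name: str
    traffic_class: str   # assumed label, e.g. "business-critical"
    health_score: float  # assumed scale of 0 (worst) to 10 (best)


def peer_percentile(target: AppHealth, apps: list[AppHealth]) -> float:
    """Return the percentage of same-class peers scoring at or below the target."""
    peers = [
        a for a in apps
        if a.traffic_class == target.traffic_class and a.name != target.name
    ]
    if not peers:
        raise ValueError("no peers in the same traffic class")
    at_or_below = sum(1 for p in peers if p.health_score <= target.health_score)
    return 100.0 * at_or_below / len(peers)


# Made-up sample data for illustration only.
apps = [
    AppHealth("crm", "business-critical", 7.0),
    AppHealth("erp", "business-critical", 9.0),
    AppHealth("voip", "business-critical", 5.0),
    AppHealth("email", "business-critical", 8.0),
    AppHealth("video-streaming", "default", 3.0),
]

crm_rank = peer_percentile(apps[0], apps)
print(f"crm scores at or above {crm_rank:.0f}% of its peers")
```

A single number like this is only a starting point for the UI; the same comparison could be repeated per time window to support the "changes over time" view described above.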